This week, from December 9th to 11th, 2024, CERN hosted the NGT Algorithm Workshop – Lattice QCD at the large scale on exascale computing facilities, a three-day event that brought together leading experts in physics and computing. The workshop is part of the Next Generation Triggers (NGT) project, within Task 1.5: New Computing Strategies for Data Modeling and Interpretation, and explored how new algorithms and exascale computing can advance lattice QCD simulations.

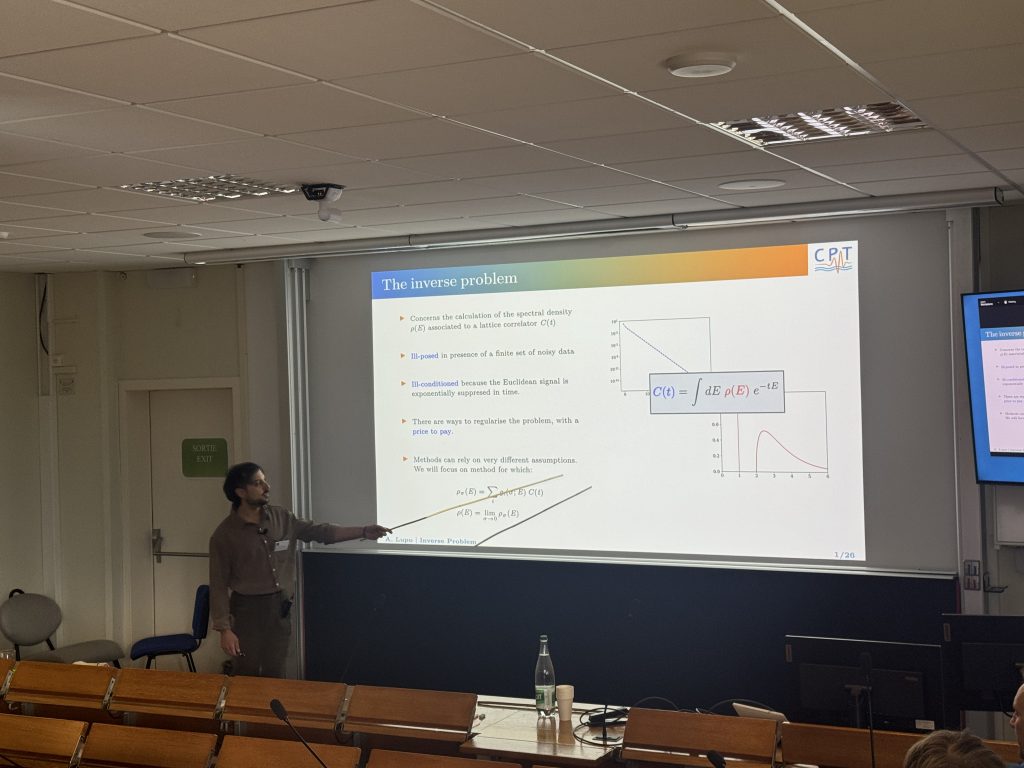

Lattice Quantum Field Theory (LQFT) may sound like a mouthful, but it is a powerful method for studying the universe’s most fundamental forces. By simulating physics on a grid (or lattice) of space-time points, researchers can investigate phenomena that are very difficult to calculate by other means, such as how quarks and gluons, the tiny particles that make up protons and neutrons, interact. With advances in algorithms and computing power, these simulations are becoming increasingly precise, which opens opportunities to uncover new physics.

The workshop opened with a focus on variance reduction techniques and machine learning applications in lattice QCD. Talks covered block Lanczos methods, multigrid low-mode averaging, multi-level sampling, and variance reduction for vector current correlators. The day concluded with a look at generative models and their potential for lattice simulations.

Day two focused on novel sampling algorithms, tackling challenges such as topological freezing and fine lattice spacings. Talks highlighted techniques such as parallel tempered metadynamics, normalizing flows for gauge theories, and nested sampling, offering new ways to make complex simulations more efficient and computationally feasible.

On the final day, the spotlight turned to next-generation computing architectures and their role in advancing lattice QCD. Highlights included advances in the Grid and QUDA frameworks, the integration of openQ*D with QUDA, and discussions on adapting simulations to heterogeneous, GPU-accelerated platforms. The event wrapped up with a collaborative discussion on the future of computational tools for Lattice Quantum Field Theory (LQFT), leaving participants energized and inspired by the possibilities ahead.

The workshop gave researchers a platform to share insights, explore new techniques, and build collaborations across disciplines. One key theme was how AI and machine learning are transforming physics research. These tools are no longer limited to commercial applications like image recognition; they now help physicists process vast amounts of data and optimize complex simulations. Participants discussed how such algorithms can make LQFT simulations faster and more efficient, opening new doors for discovery.

Reflecting on the workshop, Jacob Finkenrath, Task 1.5 Leader and one of the organizers of the event, highlighted the depth and diversity of ideas shared over the three days. He emphasized the importance of collaboration and innovation in advancing lattice QCD simulations. “We had three days of very interesting talks from young and leading researchers on novel algorithms, with many innovative and novel ideas to push simulations in our field, lattice QCD, into the era of exascale computing,” he noted. He also praised the atmosphere of the event, describing it as “very good and relaxed,” which made room for stimulating discussions among participants.

Want to dive deeper into the topics discussed? Check out the full list of presentations here.